Leverage Dockerized Headless Testcafe Automation Scripts to measure the Reliability of Applications

- Keet Malin Sugathadasa

- Jan 28, 2021


Every industry solution we see out there today promises a certain level of user experience to its users. The most appropriate way of measuring the user experience of an application is to exercise its functionality from an external point of view, by mimicking an actual user's behaviour. This is why we have Quality Engineers, who test the application from a "Real User's" perspective to see whether the User Experience is as expected before the application is released into production. UI (User Interface) Automation is usually seen as the primary responsibility of a Quality Engineer and not of a Software Engineer. That may be true, because UI automation scripts help Quality Engineers test the application like a real user and look for bugs and unexpected behaviour. But I would like to show another aspect of UI Automation scripts, from a developer's perspective, where continuous UI automation can actually tell us whether a Production application is working as expected in Real-Time.

When Quality Engineers use UI automation, it's more of a Readiness check before pushing new features into production. But when a Site Reliability Engineer uses such an approach, this is to ensure that the application is running reliably and providing the expected user experience to all its users, at all times. In one of my previous articles, I explained to you the role of a Site Reliability Engineer (SRE). In this article, I would like to show you how an SRE can leverage UI Automation Scripts to make sure that an application is running reliably at all times. I will also be showing you how to run these UI Automation scripts in a Dockerized environment. (Something that I was struggling to do, for quite some time).

Let me break this article down into the following sections.

How UI Automation can be used to measure the reliability of applications

Writing a simple UI Automation Script via Testcafe

Running a UI Automation script on a GUI vs Headless

Dockerizing your UI Automation Script

Using UI Automation to Measure the Reliability of Applications

There are multiple ways to measure the reliability of a production application. This can be defined in different ways, based on the deployment structure, purpose of the application, and even the type of expected users. Some of the common ways of measuring the reliability of applications are given below.

Pinging app URLs to check liveness

The ratio of successful to failed API calls from the application to the backends

Browser JavaScript errors

Load time of Pages

Injecting Chaos and verifying that applications can function in various conditions like Network downtime etc.
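As a rough illustration of the second item in the list above, the share of successful calls can be computed from a list of recorded outcomes. This is a minimal sketch: the function name `successRatio` and the sample data are made up for illustration and do not come from any particular monitoring tool.

```javascript
// Hypothetical helper: summarise the outcomes of recorded calls or checks.
// `results` is an array of booleans, true for a successful call.
function successRatio(results) {
  if (results.length === 0) {
    return null; // no data yet, so no ratio can be reported
  }
  const successes = results.filter(Boolean).length;
  return successes / results.length;
}

const lastHour = [true, true, false, true]; // made-up sample data
console.log(successRatio(lastHour)); // 0.75
```

Returning `null` for an empty list keeps "no data" distinct from "everything failed", which matters when the number feeds an alert.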

Nevertheless, every application has its own important pages and user flows that require careful attention, to ensure that the application works reliably and provides the expected User Experience (UX) to its users. Whether the user can successfully log in, or whether the user can successfully add items to a cart and check out, are crucial user flows, and they are also highly dependent on many other components of the application ecosystem. Yes, it's true that Quality Engineers verify this before deployment, but what if some dependency breaks in a real production environment? There will be no way to find out unless someone files a support ticket.

This is where Site Reliability Engineers mimic the user's behaviour in these applications with a periodic job, which checks whether these major flows function as expected. UI Automation can be leveraged here to automatically and periodically measure the reliability of certain parts of the application. Sometimes, with this approach, you will notice that the behaviour changes with user load, network latencies, backend failures, etc. Even though this is synthetic data, it ensures that certain aspects run as expected from a user's perspective. Furthermore, you can also run these scripts from different geographies, so that you can notice differences between various parts of the world.

Once you get the initial scripts and the structure ready, all you have to do is run these scripts in different environments which will give you the required metrics at the end of the process.

One hard decision here is figuring out which major user flows need to be monitored. For practical reasons, you cannot monitor every user flow in an application. For the purpose of reliability, you only need to measure the major ones. To come to this decision, you will have to speak to different stakeholders and identify the most important use cases or user flows of the application.

Writing a simple UI automation script using Testcafe

Testcafe is an Application UI Automation framework that runs on all popular environments: Windows, macOS, and Linux. It supports desktop, mobile, remote, and cloud browsers (UI or headless). Another advantage is that it is completely open-source.

In this section, let's write a simple UI automation script that can run both in a browser with a GUI and without a GUI (headless).

Please follow the steps given in the below URL, to run your first Testcafe script. Afterward we will build a simple script and run it on our own.

The following simple script was taken from the above URL. You can write your own script for your own application. Explaining how to do this is beyond the scope of this article, but if you are interested, the documentation provided by Testcafe is quite helpful.

    import { Selector } from 'testcafe';

    fixture `Getting Started`
        .page `http://devexpress.github.io/testcafe/example`;

    test('My first test', async t => {
        console.log("Starting to type the developer name");
        await t
            .typeText('#developer-name', 'Keet Malin')
            .wait(2000);

        console.log("Hitting the SUBMIT button after entering the developer name");
        await t
            .click('#submit-button');

        console.log("Waiting for the article header to be Thank you, Keet Malin!");
        // Use the assertion to check if the actual header text is equal to the expected one
        await t.expect(Selector('#article-header').innerText).eql('Thank you, Keet Malin!');
    });

To run the above script via Chrome, you can simply use the following command. It will bring up a new Google Chrome window and show you, step by step, how the test proceeds. I am using Google Chrome as a simple example here. You can use any supported browser you want. All supported browsers are given here.

    testcafe chrome script.js

This approach is preferred if you really want to see how the test simulation is working. For example, an engineer would be interested in seeing the flow of events.

Now let us talk about running this in headless mode. Headless mode does not open a browser window, but the script still runs in a browser and gives you a result. This means you can simply run it somewhere with no GUI, like a terminal or an SSH session, and it will give you the same result.

    testcafe chrome:headless script.js

This approach is preferred when you do not need to see the actual simulation and only want the end result. It is the better fit for periodic jobs that run without a user's intervention. In this article, I will be using the Headless approach, so that we can dockerize it and run it in a containerized environment.

For both the above options, you will see a response like this.

Dockerizing your Testcafe Execution

Now that you have your script ready, the next step is running it in a Docker container. Fortunately, Testcafe provides a Docker Image with Chromium and Firefox installed.

The following article explains the features of the Docker Image.

TestCafe provides a preconfigured Docker image with Chromium and Firefox installed.

But, let us create our own Dockerfile with this image and prepare it to be run.

    FROM testcafe/testcafe
    COPY . ./
    CMD [ "chromium:headless", "script.js" ]

Now run the following command from the same directory as the Dockerfile to build the docker image.

    docker build -t testcafe .

Now, run the docker image using the following command.

    docker run testcafe

Now that the docker container is ready, you can simply run this as a Kubernetes CronJob periodically, to make sure that your UI flow is working as expected.

Make sure that you have a proper reporting mechanism applied during failures. I used Slack alerts for this purpose. I had to write the code from scratch, and add it into the docker container.
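The alerting code itself is not shown in this article, so the following is only a sketch of one way such reporting could look, not the code that was actually used. It assumes Node 18+ (for the global `fetch`), the `testcafe` npm package, and a Slack incoming webhook, which accepts a JSON body with a `text` field. The function names and the `SLACK_WEBHOOK_URL` variable are hypothetical.

```javascript
// Sketch only: run a TestCafe script from Node and post failures to Slack.
// Assumptions: Node 18+ (global fetch), the `testcafe` package installed,
// and a Slack incoming-webhook URL supplied by the caller.

// Build the JSON body for a Slack incoming webhook (it accepts a `text` field).
function buildSlackPayload(flowName, failedCount) {
  return {
    text: `UI check "${flowName}" failed: ${failedCount} test(s) did not pass.`,
  };
}

// Post the payload to the webhook; throws if Slack does not answer with 2xx.
async function sendSlackAlert(webhookUrl, payload) {
  const response = await fetch(webhookUrl, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify(payload),
  });
  if (!response.ok) {
    throw new Error(`Slack webhook returned HTTP ${response.status}`);
  }
}

// Run the script headlessly through TestCafe's programmatic runner.
async function runCheck(flowName, scriptPath, webhookUrl) {
  const createTestCafe = require('testcafe'); // loaded lazily, only when a check runs
  const testcafe = await createTestCafe();
  try {
    // run() resolves to the number of failed tests.
    const failedCount = await testcafe
      .createRunner()
      .src(scriptPath)
      .browsers('chromium:headless')
      .run();
    if (failedCount > 0) {
      await sendSlackAlert(webhookUrl, buildSlackPayload(flowName, failedCount));
    }
    return failedCount;
  } finally {
    await testcafe.close(); // always shut down the TestCafe server
  }
}
```

A call such as `runCheck('login', 'script.js', process.env.SLACK_WEBHOOK_URL)` would run the check once. Note that this sketch only alerts on failed tests; if the browser cannot start at all, `run()` rejects and the error surfaces to the caller instead. How the script is wired into the Docker image is left open here.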

Key Takeaways

Once again, Testcafe is an application automation framework. The role of a quality engineer is to ensure that the quality of the application is tested before it is sent to production. Ensuring the reliability of the application throughout is actually the role of a Site Reliability Engineer (SRE).

Another point to note is that this is all synthetic data. It might not represent an actual user's problems, but it can represent general problems that occur due to the Application's ecosystem.

In this article, I just explained how to use this to validate some of the major flows. With this synthetic monitoring, you can also measure the page load times, to gather a lot of data on latencies between each page, latencies to load data, and even latencies of components that take time to render on the browser. With this, you will be able to define Service Level Objectives (SLOs) for applications too. Defining SLOs for applications is a separate topic and will not be discussed here.
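To make the latency idea a little more concrete, here is a minimal sketch of checking collected page-load times against a latency objective. The nearest-rank percentile method, the 95% target, the threshold, and the sample numbers are all assumptions chosen for illustration; real SLO definitions would come from your own stakeholders and data.

```javascript
// Nearest-rank percentile of a list of page-load times in milliseconds.
function percentile(samples, p) {
  if (samples.length === 0) {
    throw new Error('no samples');
  }
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length); // 1-based rank
  return sorted[Math.max(rank, 1) - 1];
}

// Hypothetical objective: 95% of page loads finish within `thresholdMs`.
function meetsLatencyObjective(samples, thresholdMs) {
  return percentile(samples, 95) <= thresholdMs;
}

const loadTimesMs = [820, 640, 1310, 700, 950]; // made-up sample data
console.log(percentile(loadTimesMs, 95)); // 1310
console.log(meetsLatencyObjective(loadTimesMs, 1500)); // true
```

A percentile is used instead of an average so that a few very slow page loads are not hidden by many fast ones.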

The reason I highlight the word docker is that the container can easily be run in Kubernetes as a cron job, to periodically monitor the user flows. As an SRE, you can also think of other ways to run this, but it all depends on the infrastructure you use in your organization.

A simple cronjob manifest for Kubernetes is given below.

apiVersion: batch/v1

kind: Job

metadata:

name: simple-test

spec:

backoffLimit: 3

template:

spec:

containers:

- image: <IMAGE_NAME>

name: simple-test

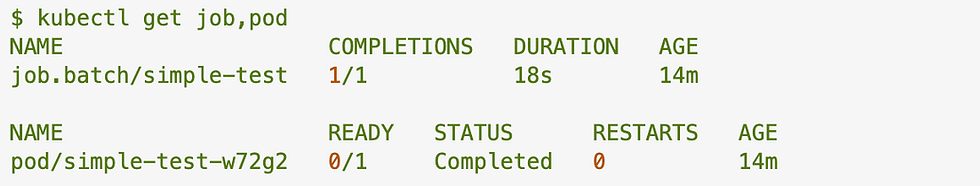

restartPolicy: NeverYou can also see the successful completion of a job.
